
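13. Computer Vision

13.1. Overview

When computers were first developed, the only way they could interact with the outside world was through input that people wired or typed into them. Today’s digital devices have cameras, microphones and other sensors, and through these sensors programs can perceive the world we live in automatically. Processing images from a camera and looking for interesting information in them is called computer vision.

As computers have become more powerful and physically smaller, and as more advanced algorithms have been developed, the range of applications for computer vision has grown considerably. Computer vision is commonly used in fields such as healthcare, security and manufacturing, and research is also under way into more and more uses in our everyday lives.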

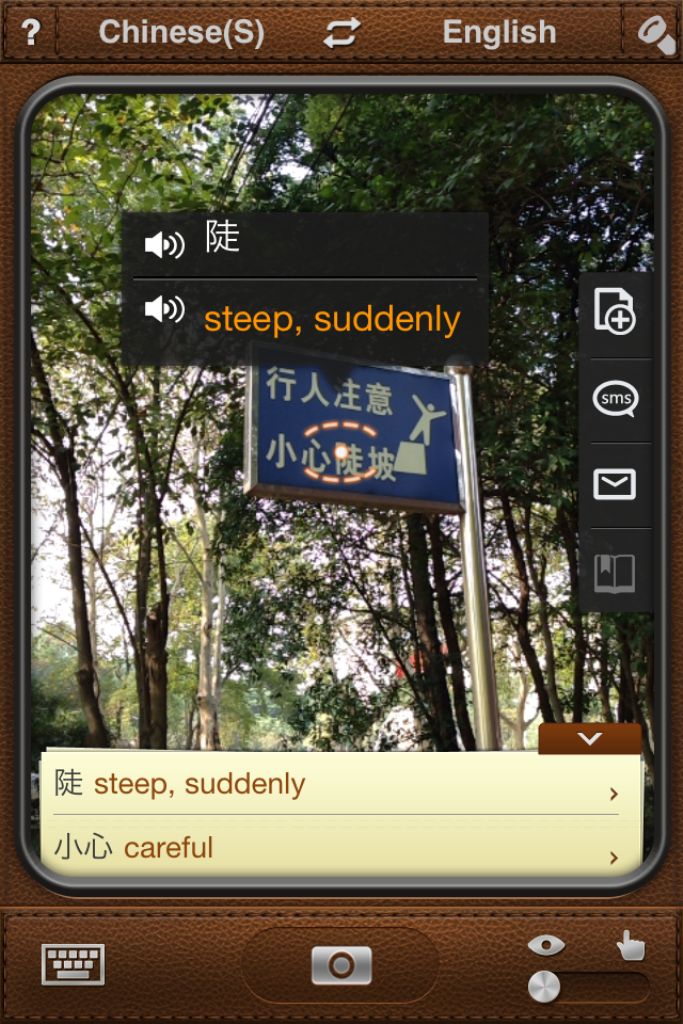

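For example, here is a sign written in Chinese.

What do you do if you can’t read Chinese? There are smartphone apps that can help you read it.

Having a small portable device that can “see” characters and translate them makes a big difference for travellers. Look carefully, though: only the last two characters have been translated, and they mean “to smoke”. The first two characters actually mean “please don’t”, so if you assumed that the translation of the last two characters was the whole message, you could take it to mean the opposite.

Recognition of Chinese characters is not always perfect. Here is a warning sign.

My smartphone recognised the first three characters of the second line, but not the last one. Why might this happen?

Giving users more detailed information through computer vision is only part of the story. Capturing information from the real world also lets computers assist us in other ways. Computer vision is already used to help drivers avoid collisions, warning them when they are too close to another car or when there is an obstacle on the road ahead. By combining computer vision with map software, cars have now been built that can travel to a destination without a human driver. A wheelchair guidance system can use computer vision to avoid bumping into doors, which makes the wheelchair much easier for anyone to operate.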

13.2. Lights, Camera, Action!

Digital cameras and human eyes fulfill largely the same function: images come in through a lens and are focused onto a light-sensitive surface, which converts them into electrical impulses that can be processed by the brain or a computer respectively. There are some differences, however.

Human eyes have a very sensitive area in the centre of their field of vision called the fovea. Objects that we are looking at directly are in sharp detail, while our peripheral vision is quite poor. We have separate sets of cone cells in the retina for sensing red, green and blue (RGB) light, but we also have special rod cells that are sensitive to light levels, allowing us to perceive a wide dynamic range of bright and dark colours. The retina has a blind spot (a place where all the nerves bundle together to send signals to the brain through the optic nerve), but most of the time we don’t notice it because we have two eyes with overlapping fields of view, and we can move them around very quickly.

Digital cameras have uniform sensitivity to light across their whole field of vision. Light intensity and colour are picked up by RGB sensor elements on a silicon chip, but they aren’t as good at capturing a wide range of light levels as our eyes are. Typically, a modern digital camera can automatically tune its exposure to either bright or dark scenes, but it might lose some detail (e.g. when it is tuned for dark exposure, any bright objects might just look like white blobs).

It is important to understand that neither a human eye nor a digital camera — even a very expensive one — can perfectly capture all of the information in the scene in front of it. Electronic engineers and computer scientists are constantly doing research to improve the quality of the images they capture, and the speed at which they can record and process them.

Curiosity: Further reading

Further reading can be found at Cambridge in Colour and Pixiq.

13.3. Noise

One challenge when using digital cameras is something called noise. That’s when individual pixels in the image appear brighter or darker than they should be, due to interference in the electronic circuits inside the camera. It’s more of a problem in low light, when the camera tries to boost the exposure of the image so that you can see more. You can see this if you take a digital photo in low light, and the camera uses a high ASA/ISO setting to capture as much light as possible. Because the sensor has been made very sensitive to light, it is also more sensitive to random interference, and gives photos a “grainy” effect.

Noise mainly appears as random changes to pixels. For example, the following image has “salt and pepper” noise.

Having noise in an image can make it harder to recognise what’s in the image, so an important step in computer vision is reducing the effect of noise in an image. There are well-understood techniques for this, but they have to be careful that they don’t discard useful information in the process. In each case, the technique has to make an educated guess about the image to predict which of the pixels that it sees are supposed to be there, and which aren’t.

Since a camera image captures the levels of red, green and blue light separately for each pixel, a computer vision system can save a lot of processing time in some operations by combining all three channels into a single “grayscale” image, which just represents light intensities for each pixel.

This helps to reduce the level of noise in the image. Can you tell why, and about how much less noise there might be? (As an experiment, you could take a photo in low light — can you see small patches on it caused by noise? Now use photo editing software to change it to black and white — does that reduce the effect of the noise?)
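One way to explore that question numerically is with a small simulation. The sketch below is only an illustration under stated assumptions: the noise in each channel is taken to be independent and normally distributed, the grayscale value is a plain average of the three channels (real converters often weight green more heavily), and the names `noisy_pixel` and `to_grayscale` are made up for this example.

```python
import random
import statistics

def noisy_pixel(true_value, sigma, rng):
    """Return one (r, g, b) reading of a grey pixel, with independent noise per channel."""
    return tuple(true_value + rng.gauss(0, sigma) for _ in range(3))

def to_grayscale(pixel):
    """Combine the three channels by simple averaging (an assumption for this sketch)."""
    return sum(pixel) / 3

rng = random.Random(42)  # fixed seed so the run is repeatable
TRUE_VALUE, SIGMA, N = 128, 10.0, 20000

pixels = [noisy_pixel(TRUE_VALUE, SIGMA, rng) for _ in range(N)]

# Spread of the values around the true brightness, before and after averaging.
red_noise = statistics.pstdev([p[0] for p in pixels])
gray_noise = statistics.pstdev([to_grayscale(p) for p in pixels])

print(f"noise in red channel: {red_noise:.2f}")
print(f"noise in grayscale:   {gray_noise:.2f}")
print(f"ratio:                {red_noise / gray_noise:.2f}")
```

Under these assumptions the ratio should come out close to the square root of 3 (about 1.7), because averaging three independent readings cancels part of the random error. Real sensor noise is not perfectly independent between channels, so a real photo may improve by less.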

Rather than just considering the red, green and blue values of each pixel individually, most noise-reduction techniques look at other pixels in a region, to predict what the value in the middle of that neighbourhood ought to be.

A mean filter assumes that pixels nearby will be similar to each other, and takes the average (i.e. the mean) of all pixels within a square around the centre pixel. The wider the square, the more pixels are averaged together, so a very wide mean filter tends to cause a lot of blurring, especially around areas of fine detail and edges where bright and dark pixels are next to each other.
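The blurring at edges can be seen in a minimal sketch of a mean filter. This is an illustration rather than the code behind any particular tool: the image is assumed to be a list of rows of grey levels, the function name `mean_filter` and its `radius` parameter are hypothetical, and windows are simply clipped at the image borders.

```python
def mean_filter(image, radius=1):
    """Replace each pixel with the mean of the square window around it.

    `image` is a list of rows of grey levels; windows are clipped at the borders.
    """
    height, width = len(image), len(image[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                image[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(sum(window) / len(window))
        result.append(row)
    return result

# A dark region next to a bright region: a sharp vertical edge.
edge = [[0, 0, 0, 90, 90, 90] for _ in range(3)]
print(mean_filter(edge)[1])  # [0.0, 0.0, 30.0, 60.0, 90.0, 90.0]
```

The sharp step from 0 to 90 has turned into a ramp through 30 and 60, which is the blurring described above. A larger `radius` spreads the ramp over more pixels.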

A median filter takes a different approach. It collects all the same values that the mean filter does, but then sorts them and takes the middle (i.e. the median) value. This helps with the edges that the mean filter had problems with, as it will choose either a bright or a dark value (whichever is most common), but won’t give you a value between the two. In a region where pixels are mostly the same value, a single bright or dark pixel will be ignored. However, numerically sorting all of the neighbouring pixels can be quite time-consuming!
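A minimal sketch of a median filter shows both effects. As before, the image is assumed to be a list of rows of grey levels and the name `median_filter` is hypothetical. When a clipped window holds an even number of pixels, this sketch takes the upper of the two middle values, which is a simplification.

```python
def median_filter(image, radius=1):
    """Replace each pixel with the median of the square window around it.

    `image` is a list of rows of grey levels; windows are clipped at the borders.
    """
    height, width = len(image), len(image[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            window = sorted(
                image[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            )
            row.append(window[len(window) // 2])  # middle value after sorting
        result.append(row)
    return result

# The same kind of sharp edge, plus one "salt" pixel (255) in the dark region.
noisy = [
    [0, 0, 0, 90, 90, 90],
    [0, 255, 0, 90, 90, 90],
    [0, 0, 0, 90, 90, 90],
]
print(median_filter(noisy)[1])  # [0, 0, 0, 90, 90, 90]
```

The stray bright pixel is gone and the edge is still a clean step from 0 to 90. The cost is the call to `sorted` for every pixel, which is where the extra processing time goes.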

A Gaussian blur is another common technique, which assumes that the closest pixels are going to be the most similar, and pixels that are farther away will be less similar. It works a lot like the mean filter above, but is statistically weighted according to a normal distribution.
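The weighting can be sketched in one dimension. The names `gaussian_kernel_1d` and `blur_row`, and the choice to repeat the edge pixels at the borders, are assumptions made for this illustration. A blur of a whole image can be built by applying the same weights along the rows and then along the columns.

```python
import math

def gaussian_kernel_1d(radius, sigma):
    """Weights proportional to exp(-d^2 / (2 * sigma^2)), normalised to sum to 1."""
    raw = [math.exp(-(d * d) / (2 * sigma * sigma)) for d in range(-radius, radius + 1)]
    total = sum(raw)
    return [w / total for w in raw]

def blur_row(row, kernel):
    """Weighted average along one row, repeating the edge pixels at the borders."""
    radius = len(kernel) // 2
    last = len(row) - 1
    return [
        sum(w * row[min(max(x + k - radius, 0), last)] for k, w in enumerate(kernel))
        for x in range(len(row))
    ]

kernel = gaussian_kernel_1d(radius=2, sigma=1.0)
print([round(w, 3) for w in kernel])  # largest weight in the middle
print([round(v, 1) for v in blur_row([0, 0, 0, 90, 90, 90], kernel)])
```

The centre pixel gets the largest weight and the weights fall away symmetrically on either side. A larger `sigma` flattens the weights and makes the result behave more like a mean filter.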

13.3.1. Activity: noise reduction filters

Open the noise reduction filtering interactive using this link and experiment with settings as below. You will need a webcam, and the widget will ask you to allow access to it.

Mathematically, this process is applying a special kind of matrix called a convolution kernel to the value of each pixel in the source image, averaging it with the values of other pixels nearby and copying that average to each pixel in the new image. The average is weighted, so that the values of nearby pixels are given more importance than ones that are far away. The stronger the blur, the wider the convolution kernel has to be and the more calculations take place.
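As a rough illustration of that process, the sketch below applies a small 3 by 3 kernel to every pixel of a tiny image. The kernel values (1-2-1, 2-4-2, 1-2-1, divided by 16) are a common small blur kernel chosen for this example and are not necessarily what the interactive uses. The function name `convolve` and the clamping at the image borders are also assumptions.

```python
def convolve(image, kernel):
    """Apply a square, odd-sized kernel to every pixel, clamping coordinates at the borders.

    The kernel used below is symmetric, so it does not need to be flipped first.
    """
    height, width = len(image), len(image[0])
    radius = len(kernel) // 2
    output = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0.0
            for ky, kernel_row in enumerate(kernel):
                for kx, weight in enumerate(kernel_row):
                    sy = min(max(y + ky - radius, 0), height - 1)
                    sx = min(max(x + kx - radius, 0), width - 1)
                    total += weight * image[sy][sx]
            output[y][x] = total
    return output

# Nearby pixels get larger weights than the corners; the weights sum to 1.
BLUR_3X3 = [[w / 16 for w in row] for row in ([1, 2, 1], [2, 4, 2], [1, 2, 1])]

image = [
    [10, 10, 10],
    [10, 90, 10],
    [10, 10, 10],
]
print(convolve(image, BLUR_3X3)[1][1])  # 30.0
```

The two inner loops run once for every entry in the kernel, so a kernel that is k pixels wide costs about k times k multiplications per pixel. That is why stronger blurs take longer to compute.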

For your project, investigate the different kinds of noise reduction filter and their settings (mask size, number of iterations) and determine:

- how well they cope with different kinds and levels of noise (you can set this in the interactive)

- how much time it takes to do the necessary processing (the interactive shows the number of frames per second that it can process)

- how they affect the quality of the underlying image (a variety of images + camera)

You can take screenshots of the image to show the effects in your writeup. You can discuss the tradeoffs that need to be made to reduce noise.

13.4. Face recognition

Recognising faces has become a widely used computer vision application. These days photo album systems like Picasa and Facebook can try to recognise who is in a photo using face recognition — for example, the following photos were recognised in Picasa as being the same person, so to label the photos with people’s names you only need to click one button rather than type each one in.

There are lots of other applications. Security systems such as customs at country borders use face recognition to identify people and match them with their passport. It can also be useful for privacy — Google Maps streetview identifies faces and blurs them. Digital cameras can find faces in a scene and use them to adjust the focus and lighting.

There is some information about how facial recognition works that you can read as background, and some more information at i-programmer.info.

There are some relevant articles on the cs4fn website that also provide some general material on computer vision.

13.4.1. Project: Recognising faces

First let’s manually try some methods for recognising whether two photographs show the same person.

- Get about 3 photos each of 3 people

- Measure features on the faces such as distance between eyes, width of mouth, height of head etc. Calculate the ratios of some of these.

- Do photos of the same person show the same ratios? Do photos of different people show different ratios? Would these features be a reliable way to recognise two images as being the same person?

- Are there other features you could measure that might improve the accuracy?
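Once you have measured a few points on each photo, the ratio comparison in the steps above can be sketched in code. Everything here is hypothetical: the landmark names, the pixel coordinates and the three ratios chosen are made up for illustration, and you would replace them with your own measurements.

```python
import math

def face_ratios(landmarks):
    """Compute scale-independent ratios from hand-measured (x, y) points, in pixels."""
    eye_distance = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    mouth_width = math.dist(landmarks["mouth_left"], landmarks["mouth_right"])
    head_height = math.dist(landmarks["chin"], landmarks["top_of_head"])
    return (
        eye_distance / head_height,
        mouth_width / head_height,
        mouth_width / eye_distance,
    )

def ratio_difference(a, b):
    """Largest absolute difference between corresponding ratios."""
    return max(abs(p - q) for p, q in zip(a, b))

# Made-up measurements: photo_a_zoomed is photo_a at twice the size.
photo_a = {
    "left_eye": (100, 120), "right_eye": (160, 120),
    "mouth_left": (110, 190), "mouth_right": (150, 190),
    "chin": (130, 240), "top_of_head": (130, 40),
}
photo_a_zoomed = {name: (2 * x, 2 * y) for name, (x, y) in photo_a.items()}
photo_c = {
    "left_eye": (95, 120), "right_eye": (165, 120),
    "mouth_left": (105, 190), "mouth_right": (155, 190),
    "chin": (130, 240), "top_of_head": (130, 40),
}

print(ratio_difference(face_ratios(photo_a), face_ratios(photo_a_zoomed)))
print(ratio_difference(face_ratios(photo_a), face_ratios(photo_c)))
```

Ratios are used instead of raw distances so that standing closer to the camera does not change the result. With real photos, the ratios for the same person will still vary with head angle and expression, so part of the project is deciding how small a difference has to be before you would call two photos a match.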

You can evaluate the effectiveness of facial recognition in free software such as Google’s Picasa or the Facebook photo tagging system, by uploading photos of a variety of people and seeing if it recognises photos of the same person. Are there any false negatives or false positives? How much can you change your face when the photo is being taken so that it no longer matches your face in the system? Does it recognise a person as being the same in photos taken several years apart? Does a baby photo of a person get matched with them when they are five years old? When they are an adult? Why does this work, or why not?

Use the following face recognition interactive to see how well the Haar-based face detection system can track a face in the image. What prevents it from tracking a face? Is it affected if you cover one eye or wear a hat? How much can the image change before it isn’t recognised as a face? Is it possible to get it to incorrectly recognise something that isn’t a face?

Open the face recognition interactive using this link and experiment with the settings. You will need a webcam, and the widget will ask you to allow access to it.

13.5. Edge detection

A useful technique in computer vision is edge detection, where the boundaries between objects are automatically identified. Having these boundaries makes it easy to segment the image (break it up into separate objects or areas), which can then be recognised separately.

For example, here’s a photo where you might want to recognise individual objects:

And here’s a version that has been processed by an edge detection algorithm:

Notice that the grain on the table above has affected the quality; some pre-processing to filter that would have helped!
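To give a feel for how boundaries can be found automatically, here is a minimal sketch that marks pixels where the brightness changes sharply. It uses the Sobel gradient kernels with a single threshold, which is much simpler than the algorithm that produced the image above. The function name `gradient_edges` and the threshold value are assumptions for this example.

```python
# Sobel kernels: estimate how fast brightness changes horizontally and vertically.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def gradient_edges(image, threshold):
    """Mark interior pixels whose brightness gradient magnitude exceeds `threshold`.

    `image` is a list of rows of grey levels; border pixels are left as 0.
    """
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = sum(SOBEL_X[j][i] * image[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(SOBEL_Y[j][i] * image[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                edges[y][x] = 1
    return edges

# A dark region next to a bright region.
image = [[0, 0, 0, 90, 90, 90] for _ in range(4)]
for row in gradient_edges(image, threshold=100):
    print(row)
```

Only the pixels on either side of the dark-to-bright boundary are marked. A noisy pixel also produces a large gradient, which is why grain in a photo shows up as false edges unless the image is smoothed first.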

You can experiment with edge detection yourself. Open the following interactive, which provides a Canny edge detector (see the information about Canny edge detection on Wikipedia). This is a widely used algorithm in computer vision, developed in 1986 by John F. Canny.

Open the edge detection interactive using this link and experiment with settings as below. You will need a webcam, and the widget will ask you to allow access to it.

13.5.1. Activity: Edge detection evaluation

With the Canny edge detection interactive above, try putting different images in front of the camera and determine how good the algorithm is at detecting boundaries in the image. Capture images to put in your report as examples to illustrate your experiments with the detector.

- Can the Canny detector find all edges in the image? If there are some missing, why might this be?

- Are there any false edge detections? Why did the system think that they were edges?

- Does the lighting on the scene affect the quality of edge detection?

- Does the system find the boundary between two colours? How similar can the colours be and still have the edge detected?

- How fast can the system process the input? Does the nature of the image affect this?

- How well does the system deal with a page with text on it?

13.6. The whole story!

The field of computer vision is changing rapidly at the moment because camera technology has been improving quickly over the last couple of decades. Not only is the resolution of cameras increasing, but they are more sensitive in low-light conditions, have less noise, can operate in infra-red (useful for detecting distances), and are getting very cheap so that it’s reasonable to use multiple cameras, perhaps to give different angles or to get stereo vision.

Despite these recent changes, many of the fundamental ideas in computer vision have been around for a while; for example, the “k-means” segmentation algorithm was first described in 1967, and the first digital camera wasn’t built until 1975 (it was a 100 by 100 pixel Kodak prototype).

(More material will be added to this chapter in the near future)