ComplexityAndTractability

11. 計算の複雑さと扱いやすさ

11.1.概要

コンピュータにとっても難しすぎる問題ってあるのでしょうか?その答えは”Yes” です.

このあと「人工知能」の章で,単におしゃべりをすることがコンピュータが得意ではないことが分かります.その理由は,コンピュータが話せないからではなく,気の利いたことを理解したり考えたりすることができないからです.ですが,これは,この章でいう「難しい」問題ではありません.コンピュータが会話ができないとうことではなく,私たちがどのようにおしゃべりをしているのかを私たち自身が分かっていない,つまり,私たちがコンピュータにどうすればよいか説明できないのです.

この章では,例えばプログラムを書くことで,何をすればよいかコンピュータに簡単に説明できるのに,あまりにも時間がかかる,そう何百万世紀もかかるので,私たちが望んでいることをコンピュータができないという問題を扱います.高性能のコンピュータを手に入れるだけでは十分ではありません.100倍速いコンピュータでも,何百万年もかかります.このような問題を「難しい」問題といいます.考えうる最速のコンピュータを使っても人生の何倍も時間がかかるような問題のことです.扱いやすい(逆に,手に負えない)問題に関する分野では,入力のサイズが非常に小さな場合をのぞいて,現実的ではないほど手間がかかる問題やアルゴリズムを探求します.「扱いやすい」で何を意味するか後ほど定義しますが,乱暴に言うと,扱いやすい問題とは現実的な時間で終了するプログラムを書ける問題のことで,手に負えない問題とは一般にとてつもなく時間がかかるプログラムしか書けない問題のことです.

このような困難な問題の一つを解こうとするときにそのことを知っていることはとても重要です.さもなければ,その問題を解く良い方法を見つけようとして時間を浪費するばかりで,得るものは何もありません.コンピュータ科学者は,手に負えない問題であると認識出来る必要があります.そうすれば,人々は他の方法を取ることができます.このようなときにとられる一般的な方法は,完全な解答を得るのを諦め,代わりに近似解を得ることにすることです.困難な問題に対して良い近似解を得る技法には様々なものがあります.厳密な解に対するどのくらい解が近いか保証されない方法は,しばしばヒューリスティクスと呼ばれます.

この章で扱う手に負えない問題の重要な例の1つがトラベリングセールスマン問題 (travelling salesman problem, TSP) です.これは,次のような分かりやすい問題です.あなたがこれから訪れたい場所があり,あなたはこれらの場所のどの組についてもそれらの場所の間の距離を知っています.ことのとき,すべての場所をちょうど1回ずつ訪れる最短経路を見つけましょうという問題です.配送業者がどのような順番で荷物を配達するか,ロックバンドがどのような順番でツアーをするのか,パーティーの後にお酒を飲まなかった人が友だちをどのような順番で送り届けるのかなどの現実的な問題です.実は,都市間の距離は,実際の距離である必要はありません.都市間を移動するのに支払う料金でもよいのです.例えば,Queenstown, Christchurch, Auckland, Wellington といった都市を訪れる必要があるとき,航空運賃が一番安くなるように移動する順番を考えるのも TSP の例です.

次の対話的なシステムは,都市数を指定して地図を作成すると,その地図上のすべての都市をたどる道順を順番に試していき,それまでに見つけた一番よい道順を記録することで TSP を解くプログラムです.この対話的システムで都市数を変えて色々と試すことで,この問題が手に負えないということを体感できるはずです.まずは,都市の数を 5 にして地図を作って,”開始" をクリックして問題を解いてみましょう.

★コンテンツは準備中です★

次は,都市数を 10 (2倍) にして実行してみましょう.実行時間も2倍になるでしょうか? さらに2倍 (20都市) にするとどうなるでしょうか? 50 都市だと? どのくらいかかるか推察してみましょう.手に負えない問題の意味が分かり始めたのではないでしょうか.

もちろん,ある状況では,手に負えない問題にも良い点があります.とくに,セキュリティや暗号アルゴリズムは手に負えない問題を活用しています.暗号を破ることができますが,何十億年のかかるので意味がありません.実際,このような問題に対する高速なアルゴリズムが発見されたら,多くのコンピュータセキュリティシステムは,あっという間に役に立たないものになるでしょう.ですので,コンピュータ科学者の役割に,このような解法が存在しないことを確かなものにすることも含まれます.

この章では,TSP やその他の効率の良い解法が知られていない問題,コンピュータ使って解くのに何百万世紀かかる問題について学びます.そして,現代のコンピュータ科学の最大のミステリーに出会うことになります.それは,これらの問題をもっと効率的に解く方法があるのかどうか誰も知らないということです.実は良い方法があるのに誰も知らないだけかもしれませんし,本当に良い方法がないのかもしれません.まだ,分かっていないのです.

まずは,実際に解くことができ親しみやすい問題から始めましょう.

11.2. Algorithms, problems, and speed limits

Complexity is an important concept with problems and algorithms that solve them. Usually complexity is just the amount of time it takes to solve a problem, but there are several ways that we can measure the “time”. Using the actual time on a particular computer can be useful, but to get a rough idea of the inherent behaviour of an algorithm, computer scientists often start by estimating the number of steps the algorithm will take for n items. For example, a linear search can end up checking each of n items being searched, so the algorithm will take n steps. An algorithm that compares every pair of values in a list of n items will have to make n2 comparisons, so we can characterise it as taking aboutn2 steps. This gives us a lot of information about how good an algorithm is without going into details of which computer it was running on, which language, and how well written the program was. The term complexity is generally used to refer to these rough measures.

Having a rough idea of the complexity of a problem helps you to estimate how long it’s likely to take. For example, if you write a program and run it with a simple input, but it doesn’t finish after 10 minutes, should you quit, or is it about to finish? It’s better if you can estimate the number of steps needs to make, and then extrapolate from the time it takes other programs to find related solutions.

Jargon Buster: Asymptotic complexity

If you’re reading about complexity, you may come across some terminology like “Big Oh” notation and “asymptotic complexity”, where an algorithm that takes about n2 steps is referred to as O(n2). We won’t get into these in this chapter, but here’s a little information in case you come across the terms in other reading. “Big Oh” notation is a precise way to talk about complexity, and is used with “asymptotic complexity”, which simply means how an algorithm performs for large values of n. The “asymptotic” part means as n gets really large — when this happens, you are less worried about small details of the running time. If an algorithm is going to take seven days to complete, it’s not that interesting to find out that it’s actually 7 days, 1 hour, 3 minutes and 4.33 seconds, and it’s not worth wasting time to work it out precisely.

We won’t use precise notation for asymptotic complexity (which says which parts of speed calculations you can safely ignore), but we will make rough estimates of the number of operations that an algorithm will go through. There’s no need to get too hung up on precision since computer scientists are comfortable with a simple characterisation that gives a ballpark indication of speed.

For example, consider using selection sort to put a list of n values into increasing order. (This is explained in the chapter on algorithms). Suppose someone tells you that it takes 30 seconds to sort a thousand items. Does that sounds like a good algorithm? For a start, you’d probably want to know what sort of computer it was running on - if it’s a supercomputer then that’s not so good; if it’s a tiny low-power device like a smartphone then maybe it’s ok.

Also, a single data point doesn’t tell you how well the system will work with larger problems. If the selection sort algorithm above was given 10 thousand items to sort, it would probably take about 50 minutes (3000 seconds) — that’s 100 times as long to process 10 times as much input.

These data points for a particular computer are useful for getting an idea of the performance (that is, complexity) of the algorithm, but they don’t give a clear picture. It turns out that we can work out exactly how many steps the selection sort algorithm will take for n items: it will require about n(n-1)/2 operations, or in expanded form,n2/2 - n/2 operations. This formula applies regardless of the kind of computer its running on, and while it doesn’t tell us the time that will be taken, it can help us to work out if it’s going to be reasonable.

From the above formula we can see why it gets bad for large values of n : the number of steps taken increases with the square of the size of the input. Putting in a value of 1 thousand for n tells us that it will use 1,000,000/2 - 1,000/2 steps, which is 499,500 steps.

Notice that the second part (1000/2) makes little difference to the calculation. If we just use the n2/2 part of the formula, the estimate will be out by 0.1%, and quite frankly, the user won’t notice if it takes 20 seconds or 19.98 seconds. That’s the point of asymptotic complexity — we only need to focus on the most significant part of the formula, which contains n2

Also, since measuring the number of steps is independent of the computer it will run on, it doesn’t really matter if it’s described as n2/2 orn2. The amount of time take will be proportional to both of these formulas, so we might as well simplify it to n2. This is only a rough characterisation of the selection sort algorithm, but it tells us a lot about it, and this level of accuracy is widely used to quickly but fairly accurately characterise the complexity of an algorithm. In this chapter we’ll be using similar crude characterisations because they are usually enough to know if an algorithm is likely to finish in a reasonable time or not.

If you’ve studied algorithms, you will have learnt that some sorting algorithms, such as mergesort and quicksort, are inherently faster than other algorithms, such as insertion sort, selection sort, or bubble sort. It’s obviously better to use the faster ones. The first two have a complexity of n log(n) time (that is, the number of steps that they take is roughly proportional to n log(n)), whereas the last three have complexity of n2. Generally the consequence of using the wrong sorting algorithm will be that a user has to wait many minutes (or perhaps hours) rather than a few seconds or minutes.

Here we’re going to consider another possible sorting algorithm, called permutation sort. Permutation sort says “Let’s list all the possible orderings (“permutations”) of the values to be sorted, and check each one to see if it is sorted, until the sorted order is found”. This algorithm is straightforward to describe, but is it any good?

For example, if you are sorting the numbers 45, 21 and 84, then every possible order they can be put in (that is, all permutations) would be listed as:

45, 21, 84

45, 84, 21

21, 45, 84

21, 84, 45

84, 21, 45

84, 45, 21

Going through the above list, the only line that is in order is 21, 45, 84, so that’s the solution. It’s a very inefficient approach, but it will help to illustrate what we mean by tractability.

In order to understand how this works, and the implications, choose four different words (in the example below we have used colours) and list all the possible orderings of the four words. Each word should appear exactly once in each ordering. You can either do this yourself, or use an online permutation generator such as JavaScriptPermutations or Text Mechanic.

For example if you’d picked red, blue, green, and yellow, the first few orderings could be:

red, blue, green, yellow

red, blue, yellow, green

red, yellow, blue, green

red, yellow, green, blue

They do not need to be in any particular order, although a systematic approach is recommended to ensure you don’t forget any!

Once your list of permutations is complete, search down the list for the one that has the words sorted in alphabetical order. The process you have just completed is using permutation sort to sort the words.

Now add another word. How many possible orderings will there be with 5 words? What about with only 2 and 3 words — how many orderings are there for those? If you gave up on writing out all the orderings with 5 words, can you now figure out how many there might be? Can you find a pattern? How many do you think there might be for 10 words? (You don’t have to write them all out!).

If you didn’t find the pattern for the number of orderings, think about using factorials. For 3 words, there are 3! (“3 factorial”) orderings. For 5 words, there are 5! orderings. Check the jargon buster below if you don’t know what a “factorial” is, or if you have forgotten!

Jargon Buster

Factorials are very easy to calculate; just multiply together all the integers from the number down to 1. For example, to calculate 5! you would simply multiply: 5 x 4 x 3 x 2 x 1 = 120. For 8! you would simply multiply 8 x 7 x 6 x 5 x 4 x 3 x 2 x 1 = 40,320.

As stated above, the factorial of a number tells you how many permutations (orderings) there would be for that number of words (assuming they are all different). This means that if you are arranging 8 words, there will be 40,320 ways of arranging them (which is why you weren’t asked to try this in the first exercise!!)

Your calculator may have a ”!” button for calculating factorials and spreadsheets usually have a “FACT” function, although for the factorials under 10 in this section, we recommend that you calculate them the long way, and then use the calculator as a double check. Ensuring you understand how a factorial is calculated is essential for understanding the rest of this section!

For factorials of larger numbers, most desktop calculators won’t work so well; for example, 100! has 158 digits. You can use the calculator below to work with huge numbers (especially when using factorials and exponents).

★コンテンツは準備中です★

Try calculating 100! using this calculator — that’s the number of different routes that a travelling salesman might take to visit 100 places (not counting the starting place). With this calculator you can copy and paste the result back into the input if you want to do further calculations on the number. If you are doing these calculations for a report, you should also copy each step of the calculation into your report to show how you got the result.

There are other big number calculators available online; for example, the Big Integer Calculator. Other big calculators are available online, or you could look for one to download for a desktop machine or smartphone.

As a final exercise on permutation sort, calculate how long a computer would take to use permutation sort to sort 100 numbers. Remember that you can use the calculator that was linked to above. Assume that you don’t have to worry about how long it will take to generate the permutations, only how long it will take to check them. Assume that you have a computer that creates and checks an ordering every nanosecond.

- How many orderings need to be checked for sorting 100 numbers?

- How many orderings can be checked in a second?

- How many orderings can be checked in a year?

- How many years will checking all the orderings take?

And as an interesting thing to think about, do some calculations based on the assumptions listed below. How long would it take to use permutation sort on 100 numbers? What would happen first: the algorithm would finish, or the universe would end?

- There are 1082 atoms in the universe

- The universe has another 14 billion years before it ends

- Suppose every atom in the universe is a computer that can check an ordering every nanosecond

By now, you should be very aware of the point that is being made. Permutation sort is so inefficient that sorting 100 numbers with it takes so long that it is essentially impossible. Trying to use permutation sort with a non trivial number of values simply won’t work. While selection sort is a lot slower than quick sort or merge sort, it wouldn’t be impossible for Facebook to use selection sort to sort their list of 1 billion users. It would take a lot longer than quick sort would, but it would be doable. Permutation sort on the other hand would be impossible to use!

At this point, we need to now distinguish between algorithms that are essentially usable, and algorithms that will take billions of year to finish running, even with a small input such as 100 values.

Computer Scientists call an algorithm “intractable” if it would take a completely unreasonable amount of time to run on reasonably sized inputs. Permutation sort is a good example of an intractable algorithm. The term “intractable” is used a bit more formally in computer science; it’s explained in the next section.

But the problem of sorting items into order is not intractable - even though the Permutation sort algorithm is intractable, there are lots of other efficient and not-so-efficient algorithms that you could use to solve a sorting problem in a reasonable amount of time: quick sort, merge sort, selection sort, even bubble sort! However, there are some problems in which the ONLY known algorithm is one of these intractable ones. Problems in this category are known as intractable problems.

Curiosity : Towers of Hanoi

The Towers of Hanoi problem is a challenge where you have a stack of disks of increasing size on one peg, and two empty pegs. The challenge is to move all the disks from one peg to another, but you may not put a larger disk on top of a smaller one. There’s a description of it at Wikipedia.

This problem cannot be solved in fewer than 2n-1 moves, so it’s an intractable problem (a computer program that lists all the moves to make would use at least 2n - 1 steps). For 6 disks it only needs 63 moves, but for 50 disks this would be 1,125,899,906,842,623 moves.

We usually characterise a problem like this as having a complexity of 2n, as subtracting one to get a precise value makes almost no difference, and the shorter expression is simpler to communicate to others.

The Towers of Hanoi is one problem where we know for sure that it will take exponential time. There are many intractable problems where this isn’t the case — we don’t have tractable solutions for them, but we don’t know for sure if they don’t exist. Plus this isn’t a real problem — it’s just a game (although there is a backup system based on it). But it is a nice example of an exponential time algorithm, where adding one disk will double the number of steps required to produce a solution.

11.3. Tractability

There’s a very simple rule that computer scientists use to decide if an algorithm is tractable or not, based on the complexity (estimated number of steps) of the algorithm. Essentially, if the algorithm takes an exponential amount of time or worse for an input of size n, it is labelled as intractable. This simple rule is a bit crude, but it’s widely used and provides useful guidance. (Note that a factorial amount of time, n!, is intractable because it’s bigger than an exponential function.)

To see what this means, let’s consider how long various algorithms might take to run. The following interactive will do the calculations for you to estimate how long an algorithm might take to run. You can choose if the running time is exponential (that is,2n, which is the time required for the Towers of Hanoi problem with n disks), or factorial (that is, n!, which is the time required for checking all possible routes a travelling salesman would make to visit n places other than the starting point). You can use the interactive below to calculate the time.

For example, try choosing the factorial time for the TSP, and put in 20 for the value of n (i.e. this is to check all possible travelling salesman visits to 20 places). Press the return or tab key to update the calculation. The calculator will show a large number of seconds that the program will take to run; you can change the units to years to see how long this would be.

★コンテンツは準備中です★

So far the calculation assumes that the computer would only do 1 operation per second; try changing to a million (1,000,000) operations per second, which is more realistic, and see how long that would take.

Another way to solve problems faster is to have multiple processors work on different solutions at the same time. If you were to buy 1,000 processors (e.g. 1,000 computers, or 250 4-core computers) and have each one test out different routes, then the solution could be found 1,000 times faster. Try changing the number of processors to 1,000, and see how long that would take (you may need to change the units back — is it seconds? hours? days?)

The interactive above estimates the amount of time taken for various algorithms to run given n values to be processed. Let’s assume that we have a very fast computer, faster than any that exist. Try putting in the assumption that the computer can do a million million (1,000,000,000,000) steps per second. Is that achievable? But what if you add just two more locations to the problem (i.e. n=22 instead of n=20)?

Now, consider an algorithm that has a complexity of n2 (there are lots that take roughly this number of steps, including selection sort which was mentioned earlier). Type in a value of 1,000,000 for n to see how long it might take to sort a million items on a single processor (keep the number of steps per second at 1,000,000,000,000, but set the number of processors to just 1) — it should show that it will only take about 1 second on our hypothetical very fast machine. Now put in 10 million for n — although it’s sorting a list 10 times as big, it takes more than 10 times as long, and will now take a matter of minutes rather than seconds. At what value of n does the amount of time become out of the question — that is, how large would the problem need to be for it to take years to finish? Is anyone ever likely to be sorting this many values — for example, what if for some reason you were sorting the name of every person in the world, or every base in the human genome?

What about an algorithm with complexity of n3? What’s the largest size input that it can process in a reasonable amount of 22:14:19

Now try the same when the number of steps is 2n, but start with a value of 10 for n , then try 30, 40 , 50 and so on. You’ll probably find that for an input of about 70 items it will take an unreasonable amount of time. Is it much worse for 80 items?

Now try increasing the number of operations per second to 10 times as many. Does this help to solve bigger problems?

Trying out these figures you will likely have encountered the barrier between “tractable” and “intractable” problems. Algorithms that take n2, n3 or even n4 time to solve a problem (such as sorting a list) aren’t amazing, but at least with a fast enough computer and for the size of inputs we might reasonably encounter, we have a chance of running them within a human lifetime, and these are regarded as tractable . However, for algorithms that take 2n, 3n or more steps, the amount of time taken can end up as billions of years even for fairly small problems, and using computers that are thousand times faster still doesn’t help to solve much bigger problems. Such problems are regarded as intractable . Mathematically, the boundary between tractable and intractable is between a polynomial number of steps (polynomials are formulas made up of n2, n3, n4 and so on), and an exponential number of steps (2n, 3n, 4n, and so on).

The two formulas n2 and 2n look very similar, but they are really massively different, and can mean a difference between a few seconds and many millennia for the program to finish. The whole point of this chapter is to develop an awareness that there are many problems that we have tractable algorithms for, but there are also many that we haven’t found any tractable algorithms for. It’s very important to know about these, since it will be futile to try to write programs that are intractable, unless you are only going to be processing very small problems.

Note that algorithms that take a factorial amount of time (n!, or 1 \times 2 \times 3 \times \ldots n) are in the intractable category (in fact, they take times that are a lot worse than 2n). Essentially any algorithm that tries out all combinations of the input will inevitably be intractable because the number of combinations is likely to be exponential or factorial. Thus an important point is that it’s usually not going to work to design a system that just tries out all possible solutions to see which is the best.

Although we’ve provided n6 as an example of a tractable time, nearly all algorithms you’re likely to encounter will be n3 and better, or 2n and worse — only very specialised ones fall in the gap between those. So there’s a big gulf between tractable and intractable problems, and trying to grapple with it is one of the biggest problems in computer science!

What about Moore’s law, which says that computing power is increasing exponentially? Perhaps that means that if we wait a while, computers will be able to solve problems that are currently intractable? Unfortunately this argument is wrong; intractable problems are also exponential, and so the rate of improvement due to Moore’s law means that it will only allow for slightly larger intractable problems to be solved. For example, if computing speed is doubling every 18 months (an optimistic view of Moore’s law), and we have an intractable problem that takes 2n operations to solve (many take longer than this), then in 18 months we will be able to solve a problem that’s just one item bigger. For example, if you can solve an exponential time problem for 50 items (50 countries on a map to colour, 50 cities for a salesman to tour, or 50 rings on a Towers of Hanoi problem) in 24 hours, then in 18 months you can expect to buy a computer that could solve it for 51 items at best! And in 20 years you’re likely to be able to get a computer that could solve for 55 items in one day. You’re going to have to be more than patient if you want Moore’s law to help out here — you have to be prepared to wait for decades for a small improvement!

Remember that if you need to do calculations of huge numbers, there’s a calculator here that you can use:

★コンテンツは準備中です★

11.4. The Travelling Salesman Problem

An example of an intractable problem is the Travelling Salesman Problem (TSP). The TSP involves a bunch of locations (cities, houses, airports,....) where you can travel between any possible pair of locations. The goal is to find the shortest route that will go through all the locations once — this is what the interactive at the start of this chapter does.

Researchers have spent a lot of time trying to find efficient solutions to the Travelling Salesman Problem, yet have been unable to find a tractable algorithm for solving it. As you learnt in the previous section, intractable algorithms are very slow, to the point of being impossible to use. As the only solutions to TSP are intractable, TSP is known as an intractable problem.

It hasn’t actually been proven that there is no tractable solution to TSP, although many of the world’s top computer scientists have worked on this problem for the last 40 years, trying to find a solution but without success. What they have managed to do is find thousands of other problems that are also intractable, and more importantly, if a solution is found for any one of these problems, we know how to convert it to a solution for any of the others (these are called NP-complete problems). They all stand and fall together, including the TSP problem. So it’s not just being lazy if you give up on finding an optimal TSP algorithm — people have tried for decades and not found a tractable algorithm. Of course, this is also a strong motivator to try to find one — if you do, you will have solved thousands of other problems at the same time! This is a great thing for a researcher to do, but if you have a program to get finished by the end of the month, it’s not a good bet to work on it.

Current algorithms for finding the optimal TSP solution aren’t a lot better than simply trying our all possible paths through the map (as in the interactive at the start of this chapter). The number of possible paths gets out of hand; it’s an intractable approach. In the project below you’ll be estimating how long it would take.

While TSP was originally identified as being the problem that sales people face when driving to several different locations and wanting to visit them in the order that leads to the shortest route (less petrol usage), the same problem applies to many other situations as well. Courier and delivery companies have variants of this problem — often with extra constraints such as limits on how long a driver can work for, or allowing for left hand turns being faster than right-hand ones (in NZ at least!)

Since these problems are important for real companies, it is not reasonable to simply give up and say there is no solution. Instead, when confronted with an intractable problem, computer scientists look for algorithms that produce approximate solutions — solutions that are not perfectly correct or optimal, but are hopefully close enough to be useful. By relaxing the requirement that the solution has to be perfectly correct, it is often possible to come up with tractable algorithms that will find good enough solutions in a reasonable time. This kind of algorithm is called a heuristic - it uses rules of thumb to suggest good choices and build up a solution made of pretty good choices.

A simple heuristic that often works OK is a greedy heuristic algorithm — an algorithm that just takes what looks like the best choice at each step. For example, for the TSP, a greedy heuristic algorithm might repeatedly take the route to the next closest city. This won’t always be the best choice, but it is very fast, and experience shows that it is typically no more than 25% worse than the optimal. There are more sophisticated ways of designing approximate algorithms that can do better than this (some can get within 3% of optimal for the TSP), but they take longer to run.

There are software companies that work on trying to make better and better approximate algorithms for guiding vehicles by GPS for delivery routes. Companies that write better algorithms can charge a lot of money if their routes are faster, because of all the fuel and time savings that can be made.

An interesting thing with intractability is that you can have two very similar problems, with one being intractable and the other being tractable. For example, finding the shortest route between two points (like a GPS device usually does) is a tractable problem, yet finding the shortest route around multiple points (the TSP) isn’t. By the way, finding the longest path between two points (without going along any route twice) is also intractable, even though finding the shortest path is tractable!

Project: The craypots problem [#wdf71e94]

You should present your findings for this project in a written report where you include your answers to the exercises, the maps you make, and written explanations to explain what you have found out and learnt.

This project is based around a scenario where there is a cray fisher who has around 18 craypots that have been laid out in open water. Each day the fisher uses a boat to go between the craypots and check each one for crayfish.

The cray fisher has started wondering what the shortest route to take to check all the craypots would be, and has asked you for your help. Because every few weeks the craypots need to be moved around, the fisher would prefer a general way of solving the problem, rather than a solution to a single layout of craypots. Therefore, your investigations must consider more than one possible layout of craypots, and the layouts investigated should have the craypots placed randomly i.e. not in lines, patterns, or geometric shapes.

When asked to generate a random map of craypots, get a pile of coins (or counters) with however many craypots you need, and scatter them onto an A4 piece of paper. If any land on top of each other, place them beside one another so that they are touching but not overlapping. One by one, remove the coins, making a dot on the paper in the centre of where each coin was. Number each of the dots. Each dot represents one craypot that the cray fisher has to check. You should label the top left corner or the paper as being the boat dock, where the cray fisher stores the boat.

Generate a map with 7 or 8 craypots using the random map generation method described above. Make an extra copy of this map, as you will need it again later.

Using your intuition, find the shortest path between the craypots.

Now generate a map (same method as above) with somewhere between 15 and 25 craypots. Make more than one copy of this map, as you will need it again later

Now on this new map, try to use your intuition to find the shortest path between the craypots. Don’t spend more than 5 minutes on this task; you don’t need to include the solution in your report. Why was this task very challenging? Can you be sure you have an optimal solution?

Unless your locations were laid out in a circle or oval, you probably found it very challenging to find the shortest route. A computer would find it even harder, as you could at least take advantage of your visual search and intuition to make the task easier. A computer could only consider two locations at a time, whereas you can look at more than two. But even for you, the problem would have been challenging! Even if you measured the distance between each location and put lines between them and drew it on the map so that you didn’t have to judge distances between locations in your head, it’d still be very challenging for you to figure out!

A straightforward algorithm to guarantee that you find the shortest route is to check all possible routes. This involves working out what all the possible routes are, and then checking each one. A possible route can be written as a list of the locations (i.e. the numbers on the craypots), in the order to go between them. This should be starting to sound familiar to you assuming you did the permutation sort discussed above. Just like in that activity you listed all the possible ordering for the values in the list to be sorted, this algorithm would require listing all the possible orderings of the craypots, which is equivalent (although you don’t need to list all the orderings for this project!).

How many possible routes are there for the larger example you have generated? How is this related to permutation sort, and factorials? How long would it take to calculate the shortest route in your map, assuming the computer can check 1 billion (1,000,000,000) possible routes per second? (i.e. it can check one route per nanosecond) What can you conclude about the cost of this algorithm? Would this be a good way for the cray fisher to decide which path to take?

Make sure you show all your mathematical working in your answers to the above questions!

So this algorithm is intractable, but maybe there is a more clever algorithm that is tractable? The answer is No.

You should be able to tell that this problem is equivalent to the TSP, and therefore it is intractable. How can you tell? What is the equivalent to a town in this scenario? What is the equivalent to a road?

Since we know that this craypot problem is an example of the TSP, and that there is no known tractable algorithm for the TSP, we know there is no tractable algorithm for the craypot problem either. Although there are slightly better algorithms than the one we used above, they are still intractable and with enough craypots, it would be impossible to work out a new route before the cray fisher has to move the pots again!

Instead of wasting time on trying to invent a clever algorithm that no-one has been able to find, we need to rely on a algorithm that will generate an approximate solution. The cray fisher would be happy with an approximate solution that is say, 10% longer more than the best possible route, but which the computer can find quickly.

There are several ways of approaching this. Some are better than others in general, and some are better than others with certain layouts. One of the more obvious approximate algorithms, is to start from the boat dock in the top left corner of your map and to go to the nearest craypot. From there, you should go to the nearest craypot from that craypot, and repeatedly go to the nearest craypot that hasn’t yet been checked. This approach is known as a greedy heuristic algorithm as it always makes the decision that looks the best at the current time, rather than making a not so good decision now to try and get a bigger pay off later. You will understand why this doesn’t necessarily lead to the optimal solution after completing the following exercises.

On a copy of each of your 2 maps you generated, draw lines between the craypots to show the route you would find following the greedy algorithm (you should have made more than one copy of each of the maps!)

For your map with the smaller number of craypots (7 or 8), compare your optimal solution and your approximate solution. Are they the same? Or different? If they are the same, would they be the same in all cases? Show a map where they would be different (you can choose where to place the craypots yourself, just use as many craypots as you need to illustrate the point).

For your larger map, show why you don’t have an optimal solution. The best way of doing this is to show a route that is similar to, but shorter than the approximate solution. The shorter solution you find doesn’t have to be the optimal solution, it just has to be shorter than the one identified by the approximate algorithm (Talk to your teacher if you can’t find a shorter route and they will advise on whether or not you should generate a new map). You will need to show a map that has a greedy route and a shorter route marked on it. Explain the technique you used to show there was a shorter solution. Remember that it doesn’t matter how much shorter the new solution you identify is, just as long as it is at least slightly shorter than the approximate solution — you are just showing that the approximate solution couldn’t possibly be the optimal solution by showing that there is a shorter solution than the approximate solution.

Even though the greedy algorithm only generates an approximate solution, as opposed to the optimal solution, explain why is it more suitable for the cray fisher than generating an optimal solution would be?

Why would it be important to the cray fisher to find a short route between the craypots, as opposed to just visiting them in a random order? Discuss other problems that are equivalent to TSP that real world companies encounter every day. Why is it important to these companies to find good solutions to TSP? Estimate how much money might a courier company be wasting over a year if their delivery routes were 10% worse than the optimal. How many different locations/towns/etc might their TSP solutions have to be able to handle?

Find a craypot layout that will result in the greedy algorithm finding the shortest route. How do you know it is the shortest route? What is a general pattern that seem to work well for this greedy algorithm?

Find a craypot layout that results in the greedy algorithm finding what seem to be a really inefficient route. Why is it inefficient? Don’t worry about trying to find an actual worst case, just find a case that seems to be quite bad. What is a general pattern that seem to make this greedy algorithm inefficient?

Don’t forget to include an introductory paragraph in your report that outlines the key ideas. It should include a brief description of what an intractable problem is, and how a computer scientist goes about dealing with such a problem. The report should also describe the Travelling Salesman Problem and the craypot problem in your own words. Explain why the craypot problem is a realistic problem that might matter to someone.

11.5. Other intractable problems

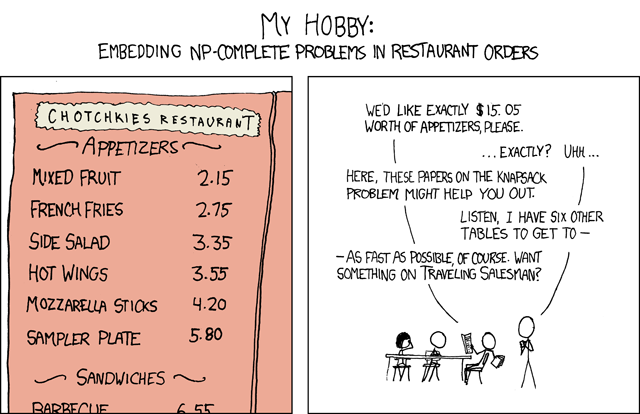

There are thousands of problems like the TSP for which no tractable solution is known. Extra sections will eventually be added here to introduce some of them, but in the meantime, if you are keen you might like to explore some of these problems:

- map and graph colouring (these can be reduced to a timetabling problem and vice versa, showing how NP-complete problems can relate to each other)

- the knapsack problem

- the bin packing problem

- Hamiltonian paths (no tractable solution for this is known, yet the very similar Eulerian path, which is often presented as the seven bridges problem, has an easy tractable solution)

- Steiner trees

- Dominating sets

- Longest path (this is interesting because finding the longest path is intractable, yet finding the shortest path is tractable - the shortest path is calculated when a GPS device works out the shortest route to a destination. Also, a Hamiltonian problem can be reduced easily to longest path, showing the concept of reduction when one NP-complete problem is used to solve another). And here’s a song about it! https://www.youtube.com/watch?feature=player_embedded&v=a3ww0gwEszo

- the Battleship problem

11.6. The whole story!

The question of tractability is a big one in computer science — in fact, what is widely regarded as the biggest unsolved problem in computer science revolves around it. You may recall that we mentioned that there are thousands of problems that are we don’t have a tractable solution for, yet a tractable solution to one can be adapted to all the others. This groups of problems is called “NP-complete” (NP stands for non-deterministic polynomial if you really want to know; complete just means that they can all be converted to each other!) The big question is whether or not there is a polynomial time algorithm for any one of them, in which case all NP problems will have a P (polynomial time) solution. The question is often referred to as whether or not P equals NP.

Actually, things get worse. So far we’ve talked about intractable problems — ones that can be solved, but might need billions of years on a computer. If you think it’s bad that some problems take that long to solve, that’s nothing! There are some well known problems that we know can never be solved on a computer. For example, writing a program that reliably tells you if another program will finish or not is impossible! There are other examples of such problems here: - http://www.cs4fn.org/algorithms/tiles.php - http://www.cs4fn.org/algorithms/uncomputable.php - http://www.cs4fn.org/algorithms/haltingproblem.php

It’s good to know about these issues, to avoid getting stuck writing impossible programs. It’s also a fascinating area of research with opportunities to make a discovery that could change the world of computing, as well as contribute to our understanding on what can and can’t be computed.

11.7. Further reading

This topic is covered very thoroughly in a way that is accessible to non-specialists in a popular book by David Harel called “Computers Ltd.: What They Really Can’t Do”.

11.7.1. Useful Links

- http://en.wikipedia.org/wiki/Computational_complexity_theory

- http://www.tsp.gatech.edu/games/index.html

- http://csunplugged.org/graph-colouring

- http://en.wikipedia.org/wiki/Travelling_salesman_problem

- http://en.wikipedia.org/wiki/Knapsack_problem

- http://en.wikipedia.org/wiki/Bin_packing_problem

- http://en.wikipedia.org/wiki/Hamiltonian_path

- http://en.wikipedia.org/wiki/Brute-force_search